Reading the SQL Server Error Log is important when troubleshooting many issues for SQL Server. Some example issues would be errors related to Always On Availability Groups, long IO occurrence messages, and login failures. Unfortunately, the SQL Server Error Log can be very full of information, making specific things hard to find, … Continue reading How To Read The SQL Server Error Log

SQL Server Developers are under-rated. That’s right! I’m a DBA and I said, “SQL Developers are under-rated.” Dedicated SQL Developers help I.T. teams by writing efficient code that gets just the data that is needed and in a way that leverages how the database engine works best. How do you ensure you’re doing … Continue reading 3 Tips for Writing Good Code as a SQL Developer

You know you need to be thinking about SQL Server security, but maybe you’re not sure where to start. Topics like firewalls and ports and port scanners and such may be dancing your mind. Those are good things to think about, but they are not under your sphere of influence as a data professional in … Continue reading How to Check SQL Server Security Part 1

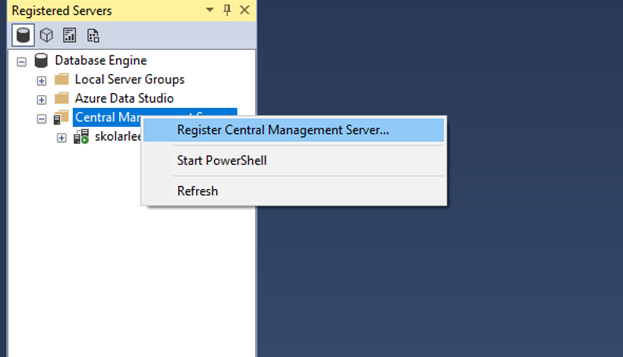

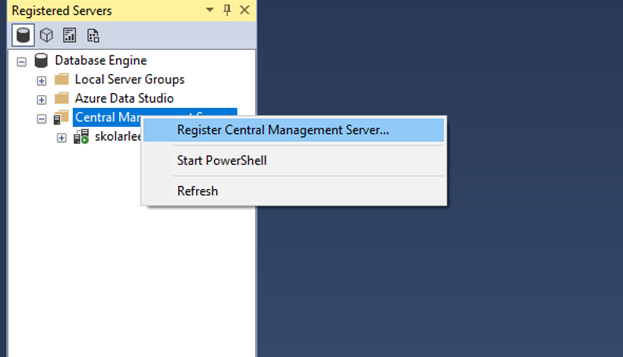

You’re a data professional and you’ve been given the keys to a new SQL Server environment. You know you need to build a SQL Server inventory so you know what is in your environment, but how do you get that information? One of the things I have talked about in other posts is how to … Continue reading How to Build a SQL Server Inventory Using PowerShell

You need to create a SQL Server inventory. Without this information, you’ll be unaware of critical information about the environment your responsible to manage. We’re on a journey to make sure that you as a DBA know what SQL Servers are in your environment so you know what you’re responsible for managing. As your guide, … Continue reading How to Build a SQL Server Inventory Using T-SQL Scripts

I was recently thinking of SQL Server temporal tables and how there is a perspective that you shouldn’t use proprietary features of a product because it locks you into that product. I want to be your guide on this matter. Database platforms are typically long term decisions First of all, that product was … Continue reading Is it ok to use proprietary database features

You’re a data professional learning about managing SQL Server and you’ve been asked to grant permissions for SQL Server to an individual or a group of individuals. What do you need to understand in order to accomplish this? I’ll be your guide to getting started with handling access to SQL Server. Let’s start with an … Continue reading What Are SQL Server Logins and Users

The MAP Toolkit, also called the Microsoft Assessment and Planning Toolkit, is a standard way to obtain information about your Microsoft environment. This includes your SQL Server. The tool is so common that a link to it is included in the SQL Server media. This tool will scan your network and provide a fairly … Continue reading Building a SQL environment inventory with the MAP Toolkit

You’re a data professional and you’re trying to keep up with patching a wide range of SQL Server versions and editions. How do you know what’s in the CU and whether you should apply it or not? My favorite way to read up on CUs is to go to SQLServerUpdates and click around there. … Continue reading Error Messages 8114 or 22122 When Performing Change Tracking Cleanup

You’ve just been hired into a DBA role at a new company, or you’ve been given the DBA keys at your current company. Maybe you’re a SysAdmin and your boss has informed you that you are now supposed to manage the SQL Servers as well as everything else on your plate. In any of … Continue reading Four Areas to Focus on When You Start A New DBA Role